crossposted from willowgarage.com

This summer, Alex Teichman of Stanford University worked on an image descriptor library and used it to develop a new way for people to interact directly with the PR2. A keyboard or a joystick works well for directly controlling a robot, but what if an autonomous robot wanders into a group of people? How can they affect its behavior?

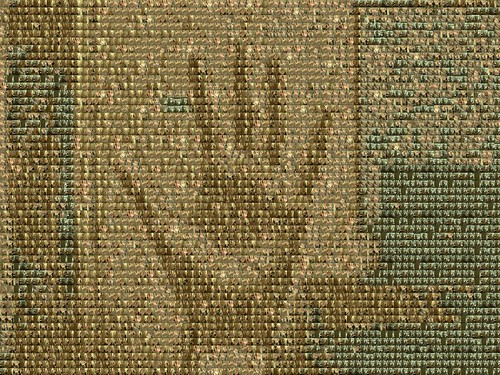

Alex's approach allows the PR2 to "talk to the hand," as many of us cruelly experienced in middle school. In the video, Alex demonstrates that the PR2 can be made to stop and go simply by holding a hand up to the stereo camera. To accomplish this, Alex used a machine-learning algorithm called Boosting, along with image descriptors such as the color of local regions and object edges. He trained his algorithm on a data set that was labelled using the Amazon Mechanical Turk library that Alex Sorokin developed. This library harnesses paid workers on the Internet to perform tasks, such as identifying hands in images, which produces the labelled examples that algorithms like Boosting need for training.
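The post doesn't say which Boosting variant or weak learners the hand detector used. As a rough illustration only, here is a minimal sketch assuming discrete AdaBoost with single-feature threshold "stumps," trained on precomputed descriptor vectors labelled +1 (hand) or -1 (no hand). The two toy features (a skin-color fraction and an edge density), the data values, and the helper names `train_adaboost`, `classify`, and `drive_command` are hypothetical, not part of Alex's code.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Stump:
    """Weak learner: thresholds a single descriptor value."""
    feature: int
    threshold: float
    polarity: int  # +1 predicts "hand" above the threshold, -1 predicts it at or below
    alpha: float = 0.0  # vote weight assigned by boosting

    def predict(self, x: Sequence[float]) -> int:
        return self.polarity if x[self.feature] > self.threshold else -self.polarity


def train_adaboost(X: List[Sequence[float]], y: List[int], rounds: int) -> List[Stump]:
    """Discrete AdaBoost; y[i] is +1 (hand) or -1 (no hand)."""
    n = len(X)
    w = [1.0 / n] * n  # start with uniform example weights
    ensemble: List[Stump] = []
    for _ in range(rounds):
        best, best_err = None, float("inf")
        # Exhaustively try every feature, every observed value as threshold, both polarities.
        for f in range(len(X[0])):
            for t in sorted({x[f] for x in X}):
                for p in (1, -1):
                    s = Stump(f, t, p)
                    err = sum(wi for wi, xi, yi in zip(w, X, y) if s.predict(xi) != yi)
                    if err < best_err:
                        best, best_err = s, err
        # Clamp the weighted error so the log below stays finite for perfect stumps.
        eps = min(max(best_err, 1e-10), 1 - 1e-10)
        best.alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append(best)
        # Upweight misclassified examples, downweight correct ones, then renormalize.
        w = [wi * math.exp(-best.alpha * yi * best.predict(xi)) for wi, xi, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
        if best_err == 0:  # training set separated perfectly; extra rounds add nothing
            break
    return ensemble


def classify(ensemble: List[Stump], x: Sequence[float]) -> int:
    """Weighted vote of all stumps: +1 means a hand was detected."""
    score = sum(s.alpha * s.predict(x) for s in ensemble)
    return 1 if score > 0 else -1


def drive_command(ensemble: List[Stump], x: Sequence[float]) -> str:
    """Hypothetical stop/go rule: stop whenever a hand is seen."""
    return "stop" if classify(ensemble, x) == 1 else "go"


# Hypothetical toy descriptors: [skin-color fraction, edge density] per image window.
X = [[0.8, 0.6], [0.7, 0.5], [0.9, 0.7], [0.1, 0.2], [0.2, 0.1], [0.15, 0.4]]
y = [1, 1, 1, -1, -1, -1]
model = train_adaboost(X, y, rounds=10)
print(drive_command(model, [0.85, 0.55]))  # → stop
```

Decision stumps are a common choice of weak learner because each round only has to pick one descriptor dimension and one threshold; a real detector would use far more training windows and much richer descriptor vectors.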

Hand detection is part of a larger effort by Alex Teichman to develop a library with a common interface to image descriptors. This library, descriptors_2d, lets ROS developers easily use descriptors like SURF, HOG, Daisy, and Superpixel Color Histogram.
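The post doesn't describe the actual descriptors_2d API, so the following is only a hypothetical Python sketch of what a common descriptor interface buys you: every descriptor reports a fixed output length and computes a vector at an image location, so any mix of descriptors can be concatenated into one feature vector for a classifier such as Boosting. The class names, the simple local color histogram and gradient descriptors (toy stand-ins for SURF, HOG, Daisy, and the like), and the nested-list image format are all assumptions.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # row-major: image[row][col]


class ImageDescriptor(ABC):
    """Hypothetical common interface: map an image location to a fixed-length vector."""

    @abstractmethod
    def length(self) -> int: ...

    @abstractmethod
    def compute(self, image: Image, row: int, col: int) -> List[float]: ...


class LocalColorHistogram(ImageDescriptor):
    """Normalized per-channel color histogram of a square window around (row, col)."""

    def __init__(self, radius: int = 2, bins: int = 4):
        self.radius, self.bins = radius, bins

    def length(self) -> int:
        return 3 * self.bins

    def compute(self, image: Image, row: int, col: int) -> List[float]:
        hist = [0.0] * self.length()
        count = 0
        for r in range(max(0, row - self.radius), min(len(image), row + self.radius + 1)):
            for c in range(max(0, col - self.radius), min(len(image[0]), col + self.radius + 1)):
                for ch, value in enumerate(image[r][c]):
                    hist[ch * self.bins + value * self.bins // 256] += 1
                count += 1
        return [h / count for h in hist]


class LocalGradient(ImageDescriptor):
    """Absolute central-difference gradient of grayscale intensity (a crude edge cue)."""

    def length(self) -> int:
        return 2

    def compute(self, image: Image, row: int, col: int) -> List[float]:
        def gray(r: int, c: int) -> float:
            # Clamp coordinates to the image border, then average R, G, B.
            r = min(max(r, 0), len(image) - 1)
            c = min(max(c, 0), len(image[0]) - 1)
            return sum(image[r][c]) / 3.0

        dx = gray(row, col + 1) - gray(row, col - 1)
        dy = gray(row + 1, col) - gray(row - 1, col)
        return [abs(dx) / 255.0, abs(dy) / 255.0]


def describe(image: Image, row: int, col: int, descriptors: Sequence[ImageDescriptor]) -> List[float]:
    """Concatenate every descriptor's output into one feature vector."""
    features: List[float] = []
    for d in descriptors:
        v = d.compute(image, row, col)
        if len(v) != d.length():
            raise ValueError(f"{type(d).__name__} returned {len(v)} values, expected {d.length()}")
        features.extend(v)
    return features


# 4x4 test image: left half black, right half white (a vertical edge).
img = [[(0, 0, 0)] * 2 + [(255, 255, 255)] * 2 for _ in range(4)]
vec = describe(img, 1, 1, [LocalColorHistogram(radius=1, bins=2), LocalGradient()])
# The last two entries show a strong horizontal intensity change and none vertically.
```

Because callers only rely on `length()` and `compute()`, a new descriptor can be added to the feature vector without changing the training code.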

You can learn more about the hand detection techniques and image descriptors in Alex's final summer presentation (download PDF from ROS.org).
