Recently in mobile robots Category

Greetings!

We would like to announce that TurtleBot has received major (and minor) updates to both its software and hardware. These updates are final (apart from bug fixes) and will not change the API for Indigo.

TurtleBot2 now navigates much better, thanks to tuned navigation parameters and the Asus Xtion Pro as its default sensor.

It also supports the integrated interaction tools Remocon and Interactions, which provide new ways to interact with robots.

TurtleBot2 can now be installed and repaired with the TurtleBot2 ISO, which makes it easy to set up a TurtleBot2 netbook. See the TurtleBot Installation Tutorial.

In addition, there are miscellaneous updates to configuration, simulation, and multi-robot support. Please see the Migration Guide for details.

Cheers,

TurtleBot Team

The ROS simulation of PAL Robotics' mobile base, PMB-2, is now available and ready to download! You will find all the steps in the ROS wiki.

This mobile base is the one used in TIAGo, the mobile manipulator. It is now also available on its own, and the first units will start shipping in a few months, in October! PMB-2 is 100% ROS-based and can be fully customized.

PMB-2 has a maximum speed of 1 m/s and can traverse steps and gaps of up to 1.5 cm. The mobile base can carry anything on top, with a payload of 50 kg. PMB-2 features our custom suspension system, which lets it handle larger irregularities in the floor.

You can watch the first PMB-2 prototype in action in this video, which explains more of its features.

We would like to announce the recent completion of the Rapid Autonomous Complex-Environment Competing Ackermann-steering Robots (RACECAR) class. RACECAR is a new MIT Independent Activities Period (IAP) course focused on demonstrating high-speed vehicle autonomy in MIT's basement hallways (tunnels). The MIT News Office published an overview article with video at: https://newsoffice.mit.edu/

Instructors provided student teams with a model car outfitted with sensors, embedded processing, and a ROS-based software infrastructure. The base platform is a Traxxas 1:10-scale radio-controlled (RC) rally car with a brushless motor, capable of speeds over 40 mph. The sensor suite consists of a Hokuyo 10 m scanning lidar, Pixhawk's PX4Flow optical flow camera, a Point Grey imaging camera, and SparkFun's Razor inertial measurement unit. Control and autonomy algorithms run on board an embedded NVIDIA Jetson TK1 development kit running the Ubuntu Linux operating system with "The Grinch" custom kernel. The TK1's pulse width modulation (PWM) output signals drive the motor's electronic speed controller and the steering servomotor, bypassing the RC receiver.

The system uses the Robot Operating System (ROS) framework to facilitate rapid development. Existing ROS drivers (urg_node, razor_imu_9dof, pointgrey_camera_driver, and px4flow_node) receive data from the sensors. The model car's throttle and steering signals are commanded with a new ROS driver interface to the kernel's sysfs-based PWM subsystem. Students develop software and visualize data through a wireless network connection to a virtual machine running on their personal laptops.
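The post doesn't include the new PWM driver itself. As a minimal sketch of what commanding the ESC and steering servo through the kernel's sysfs PWM interface can look like, the Python below maps a normalized command in [-1, 1] to a pulse width and writes the standard `export`, `period`, `duty_cycle`, and `enable` attribute files. The 20 ms period, the 1.0-2.0 ms pulse range, and the chip/channel numbers are assumptions (typical RC values), not the course's actual settings; the `root` argument lets you point it at a scratch directory instead of `/sys/class/pwm`.

```python
from pathlib import Path

# Assumed RC timing for the steering servo and ESC: a 50 Hz frame (20 ms period)
# with pulses from 1.0 ms (full one way) to 2.0 ms (full the other way).
PERIOD_NS = 20_000_000
MIN_PULSE_NS = 1_000_000
MAX_PULSE_NS = 2_000_000


def command_to_duty_ns(command: float) -> int:
    """Map a normalized command in [-1, 1] to a pulse width in nanoseconds."""
    command = max(-1.0, min(1.0, command))  # clamp out-of-range commands
    neutral = (MIN_PULSE_NS + MAX_PULSE_NS) / 2
    half_span = (MAX_PULSE_NS - MIN_PULSE_NS) / 2
    return int(round(neutral + command * half_span))


class SysfsPwmChannel:
    """Writes one channel of the Linux sysfs PWM interface (pwmchipN/pwmM)."""

    def __init__(self, chip: int, channel: int, root: str = "/sys/class/pwm"):
        self.chip_dir = Path(root) / f"pwmchip{chip}"
        self.channel = channel
        self.channel_dir = self.chip_dir / f"pwm{channel}"

    @staticmethod
    def _write(path: Path, value: int) -> None:
        path.write_text(f"{value}\n")

    def setup(self) -> None:
        # On a real kernel, writing the channel number to "export" creates pwmM/.
        if not self.channel_dir.exists():
            self._write(self.chip_dir / "export", self.channel)
        # Set the period before the duty cycle so duty_cycle never exceeds period.
        self._write(self.channel_dir / "period", PERIOD_NS)
        self._write(self.channel_dir / "duty_cycle", command_to_duty_ns(0.0))
        self._write(self.channel_dir / "enable", 1)

    def command(self, value: float) -> None:
        """Send a normalized steering or throttle command in [-1, 1]."""
        self._write(self.channel_dir / "duty_cycle", command_to_duty_ns(value))
```

In a ROS node, `setup()` would run once per channel at startup and `command()` from the teleop or autonomy callback; keeping the mapping in a pure function makes it easy to test off the car.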

Given the hardware platform and a basic teleoperation software stack, teams of 4-5 students prototype autonomy algorithms over an intense two-week period. Students are invited to explore a variety of navigation approaches, from reactive to map-based control. At the end of the class, the teams' solutions are tested in a timed race around a closed-circuit course in MIT's tunnels. In January 2015, three of the four teams reached the finish line, with the winning team's average speed exceeding 7 mph.
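As an illustration of the reactive end of that spectrum (and not any team's actual solution), here is a hedged sketch that steers toward the most open direction in a single laser scan. The arguments mirror `sensor_msgs/LaserScan` fields; the 0.34 rad steering limit is an assumed value for a 1:10-scale car.

```python
import math
from typing import Sequence


def reactive_steering(ranges: Sequence[float], angle_min: float,
                      angle_increment: float, range_max: float,
                      max_steer: float = 0.34) -> float:
    """Steer toward the most open beam of a laser scan.

    Returns a steering angle in radians (positive = left, per ROS convention),
    clamped to +/- max_steer.
    """
    best_key = None
    best_angle = 0.0
    for i, r in enumerate(ranges):
        if math.isnan(r):
            continue  # invalid reading
        r = min(r, range_max)  # +inf means no return within range: treat as open
        angle = angle_min + i * angle_increment
        # Prefer the longest range; break ties in favour of beams nearer straight ahead.
        key = (r, -abs(angle))
        if best_key is None or key > best_key:
            best_key, best_angle = key, angle
    return max(-max_steer, min(max_steer, best_angle))
```

A purely reactive rule like this needs no map, which is what makes it quick to prototype; map-based approaches trade that simplicity for the ability to plan around corners the lidar cannot yet see.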

We would like to thank the many contributors to ROS and, in particular, Austin Hendrix for hosting the armhf binaries at the time.

RACECAR Instructors

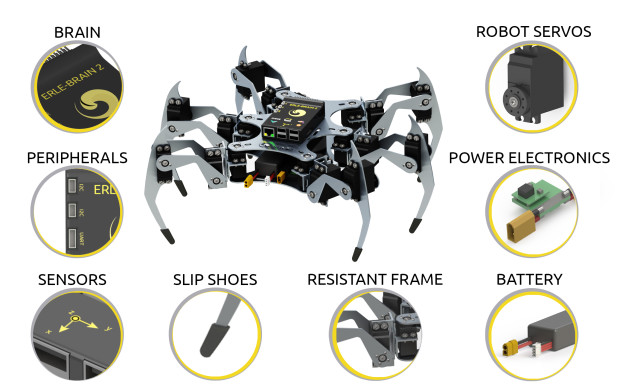

The robot shown above is a customized version of the Robotis Bioloid kit. It uses low-cost, lightweight 2D scanning technology from Rhoeby Dynamics (based on the TeraRanger 1DOF sensor), and supports SLAM, navigation, and obstacle avoidance.

Hardware Features

* Robotis Bioloid "Spider" chassis (with custom legs)

* Robotis Cm9.04 MCU board

* Nexus 4 phone provides IMU (and telepresence camera)

* Rhoeby Dynamics 2D infrared LiDAR scanner

* Bluetooth link to robot for command and status

* Remote laptop running ROS Indigo

Software Features

* 3-DOF Inverse Kinematic leg control

* Holonomic-capable gait

* Odometric feedback

* 2D LiDAR scanning

* ROS node for robot control

If you are interested, you can find more information at:

http://wiki.ros.org/Robots/

And see our website at:

http://www.rhoeby.com

- Kobuki Mobile Base

- 3D Depth Camera

- Inforce 6410 Single-board Computer or better

- 9" Screen or Larger

- Servos

- Microphone

- Speaker

- Humidity Sensor, Temperature Sensor, CO2 Meter

- Wireless Z-Wave Plus

- Zigbee

- BLE

- Wi-Fi

- Facial Recognition

- Object Recognition

- Speech Recognition

- Natural Language Processing

- And more

- Android

- iOS

From Colin Adamson of Xaxxon Technologies

Oculus Prime is a new mobile robot from Xaxxon Technologies, available as a build-it-yourself kit, or fully assembled and calibrated. The aim of the project is to be both a low cost platform for getting going with ROS, and a capable internet controlled vehicle for remote monitoring.

It features an auto-docking charging station, four wheel gear motors with rotational encoders, a 3-axis gyro, a large-capacity LiPo battery, mini-ITX desktop PC internals, Wi-Fi and Bluetooth connectivity, a tilting camera and lights, two-way audio, mounting for an Xtion depth sensor, and always-on reliability.

It currently supports the ROS Indigo navigation stack running on Xubuntu 14.04. Near-future software enhancement plans include serving up the map via HTTP to internet connected clients, so initial pose and waypoints can be set within our standard web browser tele-op interface.

Software is fully open source of course, and the motors/gyro controller PCB is open hardware. The tough ABS frame is easy to assemble (and disassemble) with snap-together connections. We plan on offering interchangeable frame parts for swapping the Xtion with other sensors, depending on demand, as well as generic upper mounting plates.

For more information:

Product page: www.xaxxon.com/

ROS wiki portal page: wiki.ros.org/Robots/

Get in touch for more info: www.xaxxon.com/contact

Thalmic Labs and Clearpath Robotics have joined forces to show that gesture-controlled robots are possible. Thalmic Labs, developers of Myo Gesture Control, released the Myo alpha developer unit to Clearpath Robotics for testing. Clearpath has successfully integrated the Myo armband with their Husky Unmanned Ground Vehicle to start, stop, and drive the vehicle using simple arm movements.

"There are a lot of interesting applications for using the Myo for robot control and our team is very excited to have the opportunity to work with the Alpha dev unit," said Ryan Gariepy, Chief Technical Officer at Clearpath Robotics. "We've been eagerly following Thalmic's progress and we've got a dozen different robots here we could do some more tests with."

Clearpath Robotics used the Robot Operating System (ROS) for most of the integration work. The Husky software package exposes a standard Twist interface, so the team needed to convert the Myo data into that format. They did so using their experimental cross-platform serialization server in socket mode.

For Myo integration and development, Clearpath Robotics added standard Windows Socket code into the provided Thalmic example code, and then determined the proper mapping from the Myo data to the desired robot velocity using timeouts and velocity limits. Further details on Myo integration cannot be released at this time.
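Clearpath hasn't published the details, so the following is only a hypothetical sketch of the kind of mapping described: normalized arm pitch and roll (assumed inputs) become forward speed and turn rate, both clamped to velocity limits, and a timeout commands a stop when armband data goes stale. The `Twist` dataclass is a stand-in for the two `geometry_msgs/Twist` fields a ground vehicle uses, and all limits are made-up values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Twist:
    """Stand-in for the two geometry_msgs/Twist fields a ground robot uses."""
    linear_x: float = 0.0   # forward speed, m/s
    angular_z: float = 0.0  # turn rate, rad/s


# Assumed limits for illustration; not Clearpath's values.
MAX_LINEAR = 0.5   # m/s
MAX_ANGULAR = 1.0  # rad/s
TIMEOUT_S = 0.5    # stop if no fresh armband data arrives within this window


def clamp(value: float, limit: float) -> float:
    return max(-limit, min(limit, value))


class GestureToTwist:
    """Hypothetical mapping from normalized arm pitch/roll to a velocity command."""

    def __init__(self) -> None:
        self.last_update: Optional[float] = None
        self.latest = Twist()

    def on_armband_data(self, pitch: float, roll: float, now: float) -> None:
        # pitch and roll are assumed to be normalized to roughly [-1, 1];
        # clamping enforces the velocity limits even if they are not.
        self.latest = Twist(clamp(pitch * MAX_LINEAR, MAX_LINEAR),
                            clamp(roll * MAX_ANGULAR, MAX_ANGULAR))
        self.last_update = now

    def command(self, now: float) -> Twist:
        """Return the Twist to publish at time `now` (seconds)."""
        if self.last_update is None or now - self.last_update > TIMEOUT_S:
            return Twist()  # stale or missing data: command a stop
        return self.latest
```

The timeout matters as much as the mapping: if the armband's connection drops, `command()` falls back to a zero Twist instead of repeating the last motion.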

Q.bo, the open source hardware/software mobile robot from Thecorpora, is now available for order as Basic or Complete kits (ranging from €499.00 to €2,299.00). A basic Q.bo features HD webcams and can be upgraded with ultrasonic and Xtion Pro sensors.

Congrats to Thecorpora on the Q.bo launch!

It looks like the TurtleBots at ClearPath Robotics are having some springtime fun. I wonder if there are any TurtleBot easter eggs you can find.

In the Robot Roundup section of MAKE Volume 27, we featured the TurtleBot hobby platform as a great reasonably priced open source robotics kit. (Check out our review on page 77.) Now, the fine folks at TurtleBot are sharing project builds on our DIY wiki, Make: Projects. To date, there are eight TurtleBot projects, and there's now a TurtleBot topic page nested under the Robotics category on the site.

Read more on the Make Blog.

Announcement from Ryan Gariepy of Clearpath Robotics

Here at Clearpath, we've been busy shipping hordes of TurtleBots to the four corners of the earth. We're really excited to see what all of you are doing with yours, so we thought we'd show everyone what happened when we looked the other way and let them take over the office. Enjoy!

All of the code shown there has been released in the new clearpath_turtlebot stack. This stack is dedicated to giving you more to do with your TurtleBot from the moment you receive or assemble it.

Current demos include person-following, TurtleBot following, and random exploration. Demos do not involve map-building or other complex techniques, making them ideal for understanding what's going on under the hood. If TurtleBot is how you're starting out in robotics, take a look!
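The clearpath_turtlebot code isn't reproduced here. One common way to build a depth-camera person follower, sketched below under stated assumptions, is to keep only the points inside a box in front of the robot, take their centroid, and drive proportionally so the centroid sits at a goal distance and straight ahead. Points are in a camera optical frame (x right, y down, z forward, metres); the box limits, goal distance, minimum point count, and gains are all hypothetical values.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]  # camera optical frame: x right, y down, z forward (m)

# Hypothetical tuning values for illustration only.
MIN_X, MAX_X = -0.3, 0.3   # lateral window
MIN_Y, MAX_Y = -0.3, 0.5   # vertical window (y grows downward)
MAX_Z = 1.5                # ignore anything farther than this
GOAL_Z = 0.8               # distance to keep from the person
MIN_POINTS = 50            # fewer points than this means "nobody to follow"
K_LINEAR, K_ANGULAR = 1.0, 2.0


def follow_command(points: Iterable[Point]) -> Tuple[float, float]:
    """Return (linear_x, angular_z) that drives toward the centroid of points in the window."""
    sum_x = sum_z = 0.0
    count = 0
    for x, y, z in points:
        if MIN_X < x < MAX_X and MIN_Y < y < MAX_Y and 0.0 < z < MAX_Z:
            sum_x += x
            sum_z += z
            count += 1
    if count < MIN_POINTS:
        return 0.0, 0.0  # nothing to follow: stop
    cx, cz = sum_x / count, sum_z / count
    linear = K_LINEAR * (cz - GOAL_Z)  # close the distance error
    # Camera x points right, but a positive angular_z turns left (REP 103),
    # so a target on the right (cx > 0) needs a negative turn rate.
    angular = -K_ANGULAR * cx
    return linear, angular
```

Because it only uses a centroid and two gains, there is no map-building involved, which matches the spirit of demos meant to be easy to read.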

As always, fully assembled and tested TurtleBots can be ordered from Clearpath Robotics.

Crossposted from WillowGarage.com

Last month, we introduced TurtleBot, a low-cost robot built on open source software. Today we're pleased to announce that the TurtleBot hardware is going open source!

We've had a great response to TurtleBot from hobbyists and developers, including a lot of interest from people who want to build their own robots, starting with components they already have. And we're delighted to see similar ROS-based low-cost mobile robots like Bilibot, POLYRO, and PrairieDog. So we decided that we can best support this emerging community by publishing all the information needed to build your own TurtleBot. Following the recently established Open Source Hardware (OSHW) definition, we will make available part numbers, CAD drawings for laser-cut hardware, board layouts and schematics, and all the necessary documentation.

Everything is coming together at turtlebot.com, a portal for all things TurtleBot and a place for the community to exchange ideas, including your own designs. To stay up to date as the site develops, subscribe to the TurtleBot announcement list.

We're currently lining up suppliers of components; if you're interested in selling TurtleBot parts, send email to info@turtlebot.com.

CoroWare has announced support for ROS on their CoroBot and Explorer mobile robots. They will support ROS on both Ubuntu Linux and Windows 7 for Embedded Systems, and plan to start shipping with ROS in the second quarter of this year.

"CoroWare's research and education customers are asking for open robotic platforms that offer a freedom of choice for both hardware and software components," said Lloyd Spencer, President and CEO of CoroWare. "We believe that ROS will further CoroWare's commitment to delivering affordable and flexible mobile robots that address the needs of our customers worldwide."

To get their users up and running on ROS, CoroWare will be hosting a "ROS Early Adopter Program" using their CoroCall HD videoconferencing system.

For more information, you can see CoroWare's press release, or you can visit robotics.coroware.com.

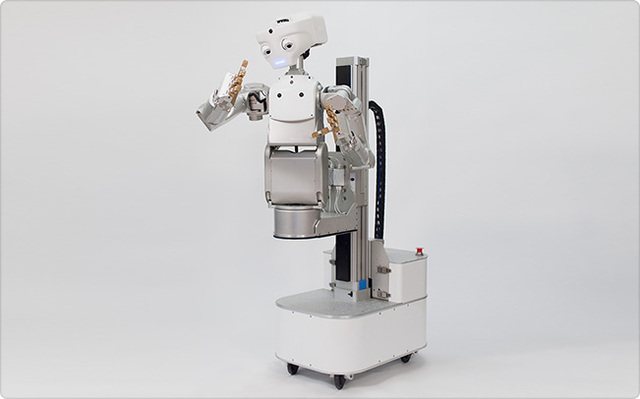

Congrats to Meka on the official announcement of their M1 Mobile Manipulation platform! The M1 integrates many existing Meka hardware products into a unified mobile manipulation platform. This includes:

- S3 Sensor Head with Kinect-compatible interface and 5MP Ethernet camera

- Two A2 Compliant Manipulators with 6-axis force-torque sensors at the wrist

- Two G2 Compliant Grippers

- B1 Omni Base with Prismatic Lift and Computation Backpack

As we've previously featured, Meka supports integration with ROS on their various robot hardware products. They are looking to take that a step further with the new M1 platform. They will start "pushing deeper on ROS integration", which means integrating the M1 with many higher-level ROS capabilities that the community has built. Users will still be able to take advantage of the great real-time capabilities that their M3 software system provides.

The M1 joins the growing ROS community of mobile manipulation platforms: Care-O-bot 3, PR2, and DARPA ARM robot. We're excited that the users of these various hardware platforms will be able to easily collaborate and push new bleeding-edge capabilities in ROS.

The M1 is inspired by Georgia Tech's Cody, which is also built using Meka components. The M1 will cost $340k for the standard model, but the modular design enables Meka to develop customized solutions as well.

All,

I would like to announce the availability of a simple ROS driver for the Neato Robotics XV-11. The neato_robot stack contains the neato_driver package (a generic Python-based driver) and the neato_node package. The neato_node subscribes to a standard cmd_vel (geometry_msgs/Twist) topic to control the base, and publishes laser scans and odometry from the robot. The neato_slam package contains our current move_base launch and configuration files (which still need some work).
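As a hedged sketch of the first step any `cmd_vel` subscriber on a differential-drive base has to perform (not the neato_node source), the functions below convert a Twist's forward speed and turn rate into left and right wheel speeds, then scale both down together if either exceeds a wheel speed limit so the commanded curvature is preserved. The 0.24 m wheel separation and 0.3 m/s wheel limit are assumed placeholders, not measured XV-11 values.

```python
from typing import Tuple

# Assumed placeholder values for illustration; not measured XV-11 parameters.
WHEEL_SEPARATION_M = 0.24
MAX_WHEEL_SPEED_MPS = 0.3


def twist_to_wheel_speeds(linear_x: float, angular_z: float,
                          wheel_separation: float = WHEEL_SEPARATION_M) -> Tuple[float, float]:
    """Differential-drive inverse kinematics: (v, omega) -> (left, right) wheel speeds in m/s."""
    half = wheel_separation / 2.0
    return linear_x - angular_z * half, linear_x + angular_z * half


def limit_wheel_speeds(left: float, right: float,
                       max_speed: float = MAX_WHEEL_SPEED_MPS) -> Tuple[float, float]:
    """Scale both wheels by the same factor so neither exceeds max_speed."""
    peak = max(abs(left), abs(right))
    if peak <= max_speed:
        return left, right
    scale = max_speed / peak
    return left * scale, right * scale
```

Scaling both wheels by one factor, rather than clipping each independently, keeps the ratio between them and therefore the arc the robot drives.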

I've uploaded two videos thus far showing the Neato:

I also have to announce our repository, since we've never officially done that: albany-ros-pkg.googlecode.com

I hope to have documentation for this new stack on the ROS wiki later today/tonight.

Mike Ferguson

ILS Social Robotics Lab

SUNY Albany

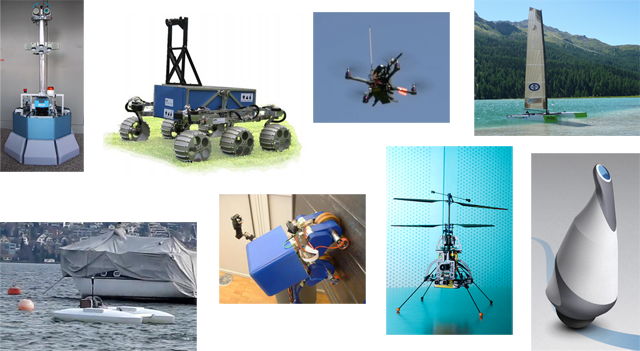

The Autonomous Systems Lab (ASL) at ETH Zurich is interested in all kinds of robots, provided that they are autonomous and operate in the real world. From mobile robots to micro aerial vehicles to boats to space rovers, they have a huge family of robots, many of which are already using ROS.

As ASL is historically a mechanical lab, their focus has been on hardware rather than software. ROS provides them a large community of software to draw from so that they can maintain this focus. Similarly, they run their own open-source software hosting service, ASLforge, which promotes the sharing of ASL software with the rest of the robotics community. Integrating with ROS allows them to more easily share code between labs and contribute to the growing ROS community.

The list of robots that they already have integrated with ROS is impressive, especially in its diversity:

- Rezero: Rezero is a ballbot, i.e. a robot that balances and drives on a single sphere.

- Magnebike: Magnebike is a compact, magnetic-wheeled inspection robot. It is designed to work on both flat and curved surfaces, so it can operate inside metal pipes with complex arrangements. A rotating Hokuyo scanner enables research on localization in these complex 3D environments.

- Robox: Robox is a mobile robot designed for tour guide applications.

- Crab: Crab is a space rover designed for navigation in rough outdoor terrain.

- sFly: The goal of the sFly project is to develop small micro helicopters capable of safely and autonomously navigating city-like environments. They currently have a family of AscTec quadrotors.

- Limnobotics: The Limnobotics project has developed an autonomous boat that is designed to perform scientific measurements on Lake Zurich.

- Hyraii: Hyraii is a hydrofoil-based sailboat.

That's not all! Stéphane Magnenat of ASL has contributed a bridge between ROS and the ASEBA framework. This has enabled integration of ROS with many more robots, including the marXbot, handbot, smartrob, and e-puck. ASL also has a Pioneer mobile robot using ROS, and their spinout, Skybotix, develops a coax helicopter that is integrated with ROS. Not all of ASL's robots are using ROS yet, but there is a chance that we will soon see ROS on their walking robot, autonomous car, and AUV.

ASL has created an ASLForge project to provide ROS drivers for Crab, and they will be working over the next several months to select more general and high-quality libraries to release to the ROS community.

ASL's family of robots is impressive, as is their commitment to ROS. They are single-handedly expanding the ROS community in a variety of new directions and we can't wait to see what's next.

Many thanks to Dr. Stéphane Magnenat and Dr. Cédric Pradalier for help putting together this post.

Qbo is a personal, open-source robot being developed by Thecorpora. Francisco Paz started the Qbo project five years ago to address the need for a low cost, open-source robot to enable the ordinary consumer to enter the robotics and the artificial intelligence world.

A couple of months ago, Thecorpora decided to switch their software development to ROS and have now achieved "99.9%" integration. You can watch the video below of Qbo's head servos being controlled by the ROS Wiimote drivers, as well as this video of the Wiimote controlling Qbo's wheels. Their use of the ROS joystick drivers means that any of the supported joysticks can be used with Qbo, including the PS3 joystick and generic Linux joysticks.

Qbo's many other sensors are also integrated with ROS, which means that they can be used with higher-level ROS libraries. This includes the four ultrasonic sensors as well as Qbo's stereo webcams. They have already integrated the stereo and odometry data with OpenCV in order to provide SLAM capabilities (described below).

It's really exciting to see an open-source robot building and extending upon ROS. From their latest status update, it sounds like things are getting close to done, including a nice GUI that lets even novice users interact with the robot.

Qbo SLAM algorithm:

The algorithm can be divided into three different parts:

The first task is to calculate the movement of the robot. To do that, we use our robot's driver, which publishes an Odometry message.
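The post doesn't describe how Qbo's driver computes odometry. As a minimal, assumption-laden sketch of what a differential-drive driver typically does before filling in a `nav_msgs/Odometry` message, the code below dead-reckons the pose from forward speed and turn rate using the mid-point heading, and converts the heading into the quaternion the message carries.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    x: float = 0.0      # metres, in the odometry frame
    y: float = 0.0
    theta: float = 0.0  # heading, radians


def normalize_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def integrate(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Dead-reckon one step using the mid-point heading (second-order Runge-Kutta)."""
    mid_theta = pose.theta + 0.5 * omega * dt
    return Pose2D(
        x=pose.x + v * dt * math.cos(mid_theta),
        y=pose.y + v * dt * math.sin(mid_theta),
        theta=normalize_angle(pose.theta + omega * dt),
    )


def yaw_to_quaternion(theta: float) -> Tuple[float, float, float, float]:
    """(x, y, z, w) quaternion for a pure rotation about z, as nav_msgs/Odometry expects."""
    return (0.0, 0.0, math.sin(theta / 2.0), math.cos(theta / 2.0))
```

Odometry like this drifts over time, which is exactly why the later steps fuse it with visual features in an EKF.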

The second task is to detect natural features in the images and estimate their positions in a three dimensional space. The algorithm used to detect the features is the GoodFeaturesToTrackDetector function from OpenCV. Then we extract SURF descriptors of those features and match them with the BruteForceMatcher algorithm, also from OpenCV.

We also track the matched points with the sparse iterative pyramidal version of Lucas-Kanade optical flow, and avoid looking for new features in places where we are already tracking another feature.

This node takes synchronized image messages as input and publishes a PointCloud message with the positions of the features, their covariance in the three coordinates, and their SURF descriptors.

The third task is to implement an Extended Kalman Filter and a data association algorithm based on the Mahalanobis distance between the point cloud seen from the robot and the point cloud of the map. To do that, we read the Odometry and PointCloud messages, and output an Odometry message and a PointCloud message with the position of the robot and the features included in the map.
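A small sketch of the data-association step, assuming per-axis (diagonal) covariances as the feature messages described above suggest, and observed features already transformed into the map frame: each observed feature is matched to the map feature with the smallest squared Mahalanobis distance, provided it falls inside a 95% chi-square gate for three degrees of freedom (about 7.815). This is a plain nearest-neighbour gate, not Thecorpora's code, and it leaves out the EKF update itself.

```python
from typing import List, Optional, Sequence, Tuple

CHI2_3DOF_95 = 7.815  # 95% quantile of the chi-square distribution with 3 DOF

# (position xyz, per-axis variance xyz); diagonal covariance is an assumption.
Feature = Tuple[Sequence[float], Sequence[float]]


def mahalanobis_sq(a: Feature, b: Feature) -> float:
    """Squared Mahalanobis distance between two features with diagonal covariances."""
    (pos_a, var_a), (pos_b, var_b) = a, b
    # The covariance of the difference is the sum of the two covariances.
    return sum((pos_a[i] - pos_b[i]) ** 2 / (var_a[i] + var_b[i]) for i in range(3))


def associate(observed: List[Feature], landmarks: List[Feature],
              gate: float = CHI2_3DOF_95) -> List[Optional[int]]:
    """Greedy per-observation nearest neighbour; None means no map feature is close enough."""
    matches: List[Optional[int]] = []
    for obs in observed:
        best_index: Optional[int] = None
        best_dist = gate
        for j, landmark in enumerate(landmarks):
            d = mahalanobis_sq(obs, landmark)
            if d < best_dist:
                best_index, best_dist = j, d
        matches.append(best_index)
    return matches
```

Unmatched observations (`None`) are the natural candidates for new map features, while matched ones feed the EKF update.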

Robotino is a commercially available mobile robot from Festo Didactic. It's used for both education and research, including competitions like RoboCup. It features an omnidirectional base, bump sensors, infrared distance sensors, and a color VGA camera. The design of Robotino is modular, and it can easily be equipped with a variety of accessories, including sensors like laser scanners, gyroscopes, and the Northstar indoor positioning system.

REC has been supportive of the Openrobotino community, which provides open-source software for use with the Robotino, and now, they are providing official ROS drivers in the robotino_drivers stack. Their current ROS integration already supports the ROS navigation stack, and you can watch the video below that shows the Robotino being controlled inside of rviz.

We're very excited to see commercially available robot hardware platforms being supported with official ROS drivers. There are over a thousand Robotino systems around the world and we hope that these drivers will help connect the Robotino and ROS communities.

I Heart Robotics has released a rovio stack for ROS, which contains a controller, a joystick teleop node, and associated launch files for the WowWee Rovio. There are also instructions and configuration for using the probe package from brown-ros-pkg to connect to Rovio's camera.

You can download the rovio stack from iheart-ros-pkg:

http://github.com/IHeartRobotics/iheart-ros-pkg

As the announcement notes, this is still a work in progress, but this release should help other Rovio hackers participate in adding new capabilities.

Bosch's Research and Technology Center (RTC) has a Segway-RMP based robot that they have been using with ROS for the past year to do exploration, 3D mapping, and telepresence research. They recently released version 0.1 of their exploration stack in the bosch-ros-pkg repository, which integrates with the ROS navigation stack to provide 2D-exploration capabilities. You can use the bosch_demos stack to try this capability in simulation.

- 1 Mac Mini

- 2 SICK scanners

- 1 Nikon D90

- 1 SCHUNK/Amtec Powercube pan-tilt unit

- 1 touch screen monitor

- 1 Logitech webcam

- 1 Bosch gyro

- 1 Bosch 3-axis accelerometer

Like most research robots, it's frequently reconfigured: they added an additional Mac mini, Flea camera, and Videre stereo camera for some recent work with visual localization.

Bosch RTC has been releasing drivers and libraries in the bosch-ros-pkg repository. They will be presenting their approach for mapping and texture reconstruction at ICRA 2010 and hope to release the code for that as well. This approach constructs a 3D environment using the laser data, fits a surface to the resulting model, and then maps camera data onto the surfaces.

Researchers at Bosch RTC were early contributors to ROS, which is remarkable as bosch-ros-pkg is the first time Bosch has ever contributed to an open source project. They have also been involved with the ros-pkg repository to improve the SLAM capabilities that are included with ROS Box Turtle, and they have been providing improvements to a visual odometry library that is currently in the works.
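The exploration stack's internals aren't covered in this post. For readers new to 2D exploration, a widely used idea is frontier detection: free cells that border unknown space mark where the map can still grow, and clusters of them become candidate navigation goals. The sketch below finds frontier clusters in a grid that follows the `nav_msgs/OccupancyGrid` value convention (-1 unknown, 0 free, higher values increasingly likely occupied); it illustrates the technique and is not Bosch's implementation.

```python
from collections import deque
from typing import List, Sequence, Tuple

UNKNOWN, FREE = -1, 0  # nav_msgs/OccupancyGrid convention

Grid = Sequence[Sequence[int]]
Cell = Tuple[int, int]


def is_frontier(grid: Grid, r: int, c: int) -> bool:
    """A frontier cell is free and has at least one unknown 4-neighbour."""
    if grid[r][c] != FREE:
        return False
    rows, cols = len(grid), len(grid[0])
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
            return True
    return False


def find_frontiers(grid: Grid, min_size: int = 1) -> List[List[Cell]]:
    """Group frontier cells into 8-connected clusters using breadth-first search."""
    rows, cols = len(grid), len(grid[0])
    seen = set()
    frontiers: List[List[Cell]] = []
    for r in range(rows):
        for c in range(cols):
            if (r, c) in seen or not is_frontier(grid, r, c):
                continue
            cluster: List[Cell] = []
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                cluster.append((cr, cc))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and (nr, nc) not in seen and is_frontier(grid, nr, nc)):
                            seen.add((nr, nc))
                            queue.append((nr, nc))
            if len(cluster) >= min_size:
                frontiers.append(cluster)
    return frontiers


def centroid(cluster: List[Cell]) -> Tuple[float, float]:
    """Mean (row, col) of a cluster; a simple choice of goal cell."""
    n = len(cluster)
    return (sum(r for r, _ in cluster) / n, sum(c for _, c in cluster) / n)
```

An exploration loop would pick one cluster's centroid (for example the nearest or largest), convert it to map coordinates, send it to move_base as a goal, and repeat as the map updates.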

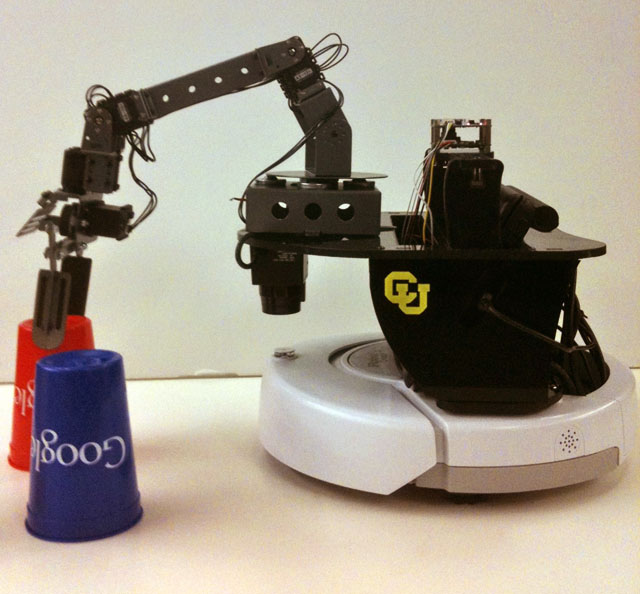

Like the Aldebaran Nao, the "Prairie Dog" platform from the Correll Lab at the University of Colorado is an example of the ROS community building on each other's results, and the best part is that you can build your own.

Prairie Dog is an integrated teaching and research platform built on top of an iRobot Create. It's used in the Multi-Robot Systems course at the University of Colorado, which teaches core topics like locomotion, kinematics, sensing, and localization, as well as multi-robot issues like coordination. The source code for Prairie Dog, including mapping and localization libraries, is available as part of the prairiedog-ros-pkg ROS repository.

Prairie Dog uses a variety of off-the-shelf robot hardware components: an iRobot Create base, a 4-DOF CrustCrawler AX-12 arm, a Hokuyo URG-04LX laser rangefinder, a Hagisonic Stargazer indoor positioning system, and a Logitech QuickCam 3000. The Correll Lab was able to build on top of existing ROS software packages, such as brown-ros-pkg's irobot_create and robotis packages, plus contribute their own in prairiedog-ros-pkg. Prairie Dog is also integrated with the OpenRAVE motion planning environment.

Starting in the Fall of 2010, RoadNarrows Robotics will be offering a Prairie Dog kit, which will give you all the off-the-shelf components, plus the extra nuts and bolts. Pricing hasn't been announced yet, but the basic parts, including a netbook, will probably run about $3500.

For more information, please see:

Photo: Prairie Dogs busy creating maps for kids and parents

Find this blog and more at planet.ros.org.