Recently in stacks Category

I would like to take a moment to announce the early-release version of the Robot Management System (RMS). RMS is a remote lab management tool designed for controlling ROS-enabled robots from the web. RMS itself refers to a web-management system written in PHP/HTML backed by a MySQL database. RMS is written in a robot-platform-independent manner, allowing for the control of a variety of robots. In addition to being cross-platform, RMS allows for basic user management, interface management, and content management. RMS is broken up into two separate stacks on ROS.org:

- rms_www contains the web server code for the RMS and comes with a detailed installation tutorial -- http://www.ros.org/wiki/rms_www

- rms contains server side (ROS-side) code such as example simulation environments. As of this email, example simulation environments are available for the Kuka youBot and PR2. In addition, example launch files are provided for the WowWee Rovio and Kuka youBot for control of physical robots. PR2 examples are soon to follow. -- http://www.ros.org/wiki/rms

Who Should Use the RMS?

RMS was designed to be an easy-to-use, easy-to-manage remote lab system for use by robotics researchers and enthusiasts. Developed in PHP/HTML with a MySQL backend, the RMS itself is not considered a light-weight system; however, by using a heavier system, tools such as user management, content management, and interface management become possible.

Does the RMS Itself Require ROS to Install?

No. The RMS was designed as a standalone system that can be easily installed on UNIX-based web servers. The decision was made so that it is possible to host the RMS site itself on campus or third-party web servers without the need to install and maintain ROS on that server. RMS will point to ROS servers which are running rosbridge v2.0 in order to control the robot.

How Does the RMS Communicate with ROS?

The RMS uses the latest version of ros.js to communicate with ROS servers. For the ROS server to communicate back to the RMS, it must be running rosbridge v2.0.
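As a rough illustration of what travels between the browser and the ROS server: rosbridge v2.0 exchanges JSON messages whose `op` field names the operation. The sketch below builds two such messages in Python purely for illustration (RMS itself uses ros.js in the browser). The topic names `/cmd_vel` and `/odom`, the message types, and the helper function names are assumptions for the example and are not taken from RMS; a real client may also need to send an `advertise` message before publishing.

```python
import json


def make_subscribe(topic, msg_type):
    """Build a rosbridge-style 'subscribe' request as a JSON string."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def make_publish(topic, msg):
    """Build a rosbridge-style 'publish' request as a JSON string."""
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


# Hypothetical velocity command: drive forward at 0.2 m/s with no rotation.
twist = {
    "linear": {"x": 0.2, "y": 0.0, "z": 0.0},
    "angular": {"x": 0.0, "y": 0.0, "z": 0.0},
}

subscribe_request = make_subscribe("/odom", "nav_msgs/Odometry")
publish_request = make_publish("/cmd_vel", twist)
print(publish_request)
```

Each string would be sent as one text frame over the WebSocket connection that rosbridge exposes.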

Cool Widgets! Do I Need RMS to Use Them?

No. Most of the widgets available in the system were developed as standalone widgets as part of the rosjs effort. The RMS admin panel simply wraps these widgets and provides an easy-to-use GUI to customize them to fit your needs.

Is There More to Come?

Yes! The current released version of RMS is a very early-stage release of the system. The following are just a few of the features we hope to add soon:

- User scheduling for environments/interfaces

- 2 examples and navigation widgets

- 3D Interactive Widgets

- Additional, more complex, interface examples

- Additional tutorials

- Much more!

RMS is being released in its early stages in hopes of receiving feedback early in its development to help shape the future of the system. That being said, the current release is stable and ready for use! Frequent updates will be made and documented on its ROS.org wiki pages: http://www.ros.org/wiki/rms_

Cheers,

Russell Toris

Announcement from Johannes Meyer and Stefan Kohlbrecher of Team Hector Darmstadt to ros-users

We are happy to announce the hector_quadrotor stack. While impressive results have been demonstrated by different groups using real quadrotor UAVs and ROS in the past, to our knowledge there is so far no solution available for comprehensive simulation of quadrotor UAVs using ROS tools.

We hope to fill this gap with the hector_quadrotor stack. Using the packages provided, a quadrotor UAV can be simulated in gazebo, similar to other mobile robots. This makes it possible to record sensor data (LIDAR, RGB-D, Stereo..) and test planning and control approaches in simulation.

The stack currently contains the following packages:

- hector_quadrotor_urdf provides a URDF model of our quadrotor UAV. You can also define your own model and attach our sensors and controllers to it.

- hector_quadrotor_gazebo contains launch files for running gazebo and spawning quadrotors.

- hector_quadrotor_gazebo_plugins contains two UAV specific plugins: a simple controller that subscribes to a geometry_msgs/Twist topic and calculates the required forces and torques and a sensor plugin that simulates a barometric altimeter. Some more generic sensor plugins not specific to UAVs (IMU, Magnetic, GPS, Sonar) are provided by package hector_gazebo_plugins in the hector_gazebo stack.

- hector_quadrotor_teleop contains a node and launch files for controlling the quadrotor using a joystick or gamepad.

- hector_quadrotor_demo provides sample launch files that run the quadrotor simulation and hector_slam for indoor and outdoor scenarios.

As many users of real quadrotors can probably confirm, testing with the real thing can quickly lead to broken hardware if something goes wrong. We hope our stack contributes to a reduction of the number of broken quadrotor UAVs in research labs around the world.

We plan to convert the plugins for Gazebo 1.0.0 as soon as ROS fuerte is released.

Two demo videos (one showing an indoor scenario, the other showing an outdoor scenario) are available here:

regards,

Johannes Meyer & Stefan Kohlbrecher

Announcement from Stéphane Magnenat of ETHZ ASL to ros-users

Dear ROS community,

I am happy to announce the availability of teer. Teer, which stands for task-execution environment for robotics, is a Python library proposing the use of co-routines (called tasks) to implement executives. Teer is an alternative to executives based on state machines such as smach. Please find more details, including installation instructions, tutorials, examples, and a reference documentation on:

http://www.ros.org/wiki/executive_teer
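To give a flavour of the co-routine style in general, here is a minimal sketch using plain Python generators and a round-robin scheduler. It does not use teer's API; all names (`drive`, `blink`, `run_tasks`) are hypothetical and only illustrate how several tasks can interleave by yielding control.

```python
from collections import deque


def drive(log, steps):
    """Task: pretend to drive forward, yielding control after every step."""
    for i in range(steps):
        log.append(f"drive step {i}")
        yield  # give other tasks a chance to run


def blink(log, times):
    """Task: pretend to blink an LED, yielding after every blink."""
    for i in range(times):
        log.append(f"blink {i}")
        yield


def run_tasks(tasks):
    """Round-robin scheduler: resume each task in turn until all finish."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)          # run the task up to its next yield
            queue.append(task)  # not finished: schedule it again
        except StopIteration:
            pass                # task finished: drop it


log = []
run_tasks([drive(log, 2), blink(log, 3)])
print(log)
```

Compared with an explicit state machine, each task keeps its control flow in ordinary loops and local variables, and the scheduler only decides which task resumes next.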

Kind regards,

Stéphane

Announcement from Roberto Guzman from Robotnik to ros-users

Hi ROS community!

A ROS stack for the Summit robot is available. The repository includes the necessary simulation nodes, teleoperation and some autonomous navigation.

Please add the Google Code repository to the index:

http://code.google.com/p/summit-ros-stack/

Best Regards,

Robert

Announcement by Thomas Moulard (LAAS) to ros-users

Dear ROS users,

I am pleased to announce the first release of humanoid_walk.

This release provides C++ and ROS interfaces for generating humanoid robot walking trajectories.

Humanoid walking algorithms often take a set of footprints as input and provide feet and center of mass trajectories as output. These reference trajectories are then forwarded to the real-time controller.

This stack provides generic interfaces for wrapping walking generation algorithms in ROS. It also provides an easy-to-use trajectory generator which can be used to generate motion.

The stack is available here: http://www.ros.org/wiki/humanoidwalk

...and is hosted on GitHub: http://www.github.com/laas/humanoidwalk

Bug reports, comments and patches are welcome!

Announcement to ros-users from João Quintas of University of Coimbra

Available at isr-uc-ros-pkg repository through isr-uc-ros-pkg.googlecode.com.

This stack is intended to implement auction-based protocols for MRS.

Best regards,

João Quintas

Mobile Robotics Laboratory

Instituto de Sistemas e Robotica

cross-posted from willowgarage.com

In a little less than three months, Yiping Liu from Ohio State University made a significant update to the camera pose stack by making it possible to calibrate cameras that are connected by moving joints, and storing the result to a URDF file. The camera pose stack captures both the relative poses of the cameras and the state of the joints between the cameras. The optimizer can run over multiple camera poses and multiple joint states.

The goal was to calibrate multiple RGB cameras, such as the Microsoft Kinect, Prosilicas, webcams, and other mounted cameras, on a robot, relative to other cameras. The results are automatically added to the URDF of the robot.

Yiping set up a PR2 with a Kinect camera mounted on its head to demonstrate the calibration between the onboard camera and statically mounted cameras. The PR2 was directed close enough to the statically mounted camera to capture and store the checkerboard pattern. The internal optimizer can produce better results with accumulated measurements.

In the other use case, the PR2 looks at itself in a previously mapped space. Yiping built a simple GUI in cameraposetoolkits for choosing the camera to calibrate. The PR2 moved in front of the selected camera and calibration was performed. The package publishes all calibrated camera frames to TF in realtime. You can watch Yiping's camera calibration tests on his video.

To use the camera pose toolkits in your work and to find the latest update, check the ROS.org site. Yiping also created tutorials with helpful information about calibrating multiple cameras.

crossposted from willowgarage.com

This Spring, Armin Hornung from the Humanoid Robots Lab at University of Freiburg in Germany visited us to work on 3D representations for manipulation and navigation in unstructured environments.

Armin made major improvements to the OctoMap 3D mapping library. Scan insertions are now twice as fast as before for real-time map updates and tree traversals are now possible in a flexible and efficient manner using iterators. The new ROS interface provides conversions from most common ROS datatypes, and Octomap server was updated for incremental 3D mapping.

Armin also worked on creating a dynamically updatable collision map for tabletop manipulation. The collider package uses OctoMap to provide map updates from laser and dense stereo sensors at a rate of about 10Hz.

Finally, Armin extended the ideas from collider to allow for navigation in complex three-dimensional environments. The 3d_navigation stack enables navigation with untucked arms for various mobile manipulation tasks such as docking the robot at a table, carrying trays, or pick and place tasks. The full kinematic configuration of the robot is checked against 3D collisions in a dynamically-built OctoMap in an efficient manner. The new planners based on SBPL exploit the holonomic movements of the base.

For more details, please see Armin's presentation below (download PDF) or check out octomap_mapping, collider, or the 3d_navigation stack at ROS.org. There is also a presentation and demo of the system scheduled at the PR2 workshop coming up at IROS 2011. Armin's improvements to OctoMap are part of OctoMap 1.2 as well as the latest octomap_mapping stack.

I would like to announce the release of dynamixel_motor stack. This stack allows you to control the full range of Robotis Dynamixel motors, including AX, RX, EX and MX series. The code is not new, it's a combination of packages relating to Dynamixel motors from ua-ros-pkg neatly packaged into a single stack. Some people have already used that code in ax12_controller_core and ax12_driver_core packages.

Documentation is available at http://www.ros.org/wiki/

Ubuntu packages for ROS Diamondback should be available shortly.

Thanks,

Anton

Development on our OpenNI/ROS integration for the Kinect and PrimeSense Developers Kit 5.0 device continues at a fast pace. For those of you participating in the contest or otherwise hacking away, here's a summary of what's new. As always, contributions/patches are welcome.

Driver Updates: Bayer Images, New point cloud and resolution options via dynamic_reconfigure

Suat Gedikli, Patrick Mihelich, and Kurt Konolige have been working on the low-level drivers to expose more of the Kinect features. The low-level driver now has access to the Bayer pattern at 1280x1024 and we're working on "Fast" and "Best" (edge-aware) algorithms for de-bayering.

We've also integrated support for high-resolution images from avin's fork, and we've added options to downsample the image to lower resolutions (QVGA, QQVGA) for performance gains.

You can now select these resolutions, as well as different options for the point cloud that is generated (e.g. colored, unregistered) using dynamic_reconfigure.

Here are some basic (unscientific) performance stats on a 1.6GHz i7 laptop:

- point_cloud_type: XYZ+RGB, resolution: VGA (640x480), RGB image_resolution: SXGA (1280x1024)
  - XnSensor: 25%, openni_node: 60%

- point_cloud_type: XYZ+RGB, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 25%, openni_node: 60%

- point_cloud_type: XYZ_registered, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 20%, openni_node: 30%

- point_cloud_type: XYZ_unregistered, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 8%, openni_node: 30%

- point_cloud_type: XYZ_unregistered, resolution: QVGA (320x240)
  - XnSensor: 8%, openni_node: 10%

- point_cloud_type: XYZ_unregistered, resolution: QQVGA (160x120)
  - XnSensor: 8%, openni_node: 5%

- No client connected (all cases)
  - XnSensor: 0%, openni_node: 0%

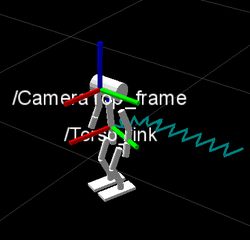

NITE Updates: OpenNI Tracker, 32-bit support in ROS

Thanks to Kei Okada and the Tokyo University JSK Lab, the Makefile for the NITE ROS package properly detects your architecture (32-bit vs. 64-bit) and downloads the correct binary.

Tim Field put together a ROS/NITE sample called openni_tracker for those of you wishing to:

- Figure out how to compile OpenNI/NITE code in ROS

- Export the skeleton tracking as TF coordinate frames.

The sample is a work in progress, but hopefully it will give you all a head start.

Point Cloud → Laser Scan

Tully Foote and Melonee Wise have written a pointcloud_to_laserscan package that converts the 3D data into a 2D 'laser scan'. This is useful for using the Kinect with algorithms that require laser scan data, like laser-based SLAM.
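The general idea of flattening a point cloud into a planar scan can be sketched as follows. This is not the code of the pointcloud_to_laserscan package; it is a minimal illustration that assumes points are given as (x forward, y left, z up) tuples in metres, keeps only a thin horizontal slice, and records the closest point in each angular bin. The function name and all parameter values are hypothetical.

```python
import math


def cloud_to_scan(points, angle_min=-math.pi / 2, angle_max=math.pi / 2,
                  num_bins=5, z_min=-0.1, z_max=0.1):
    """Project 3D points (x forward, y left, z up) onto a planar scan.

    Returns one range per angular bin; bins with no points hold math.inf.
    """
    ranges = [math.inf] * num_bins
    bin_width = (angle_max - angle_min) / num_bins
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # outside the horizontal slice we keep
        angle = math.atan2(y, x)
        if not (angle_min <= angle < angle_max):
            continue  # outside the scan's field of view
        index = min(int((angle - angle_min) / bin_width), num_bins - 1)
        distance = math.hypot(x, y)
        # Keep the closest obstacle seen in each angular bin.
        ranges[index] = min(ranges[index], distance)
    return ranges


points = [
    (1.0, 0.0, 0.0),   # straight ahead at 1 m
    (2.0, 0.0, 0.05),  # same direction but farther away
    (1.0, 1.0, 0.0),   # ahead and to the left
    (1.0, 0.0, 0.5),   # too high: outside the slice
]
scan = cloud_to_scan(points)
print(scan)
```

Taking the minimum range per bin mimics what a planar laser would report: the nearest surface along each bearing.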

OpenNI PCL

Radu Rusu is working on an openni_pcl package that will allow you to better use the Point Cloud Library with the OpenNI driver. This package currently contains a point cloud viewer as well as nodelet-based launch files for creating a voxelgrid. More launch files are on the way.
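For readers unfamiliar with voxel grids, the following is a minimal pure-Python sketch of voxel-grid downsampling: points are grouped into cubic cells and each occupied cell is replaced by the centroid of its points. It does not use PCL or the openni_pcl launch files; the function name and the `leaf_size` value are assumptions for illustration.

```python
from collections import defaultdict


def voxel_grid_downsample(points, leaf_size):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    voxels = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates of the cell containing this point.
        key = (int(x // leaf_size), int(y // leaf_size), int(z // leaf_size))
        voxels[key].append((x, y, z))
    centroids = []
    for members in voxels.values():
        n = len(members)
        centroids.append(tuple(sum(axis) / n for axis in zip(*members)))
    return centroids


cloud = [(0.1, 0.1, 0.1), (0.3, 0.1, 0.1), (1.2, 0.0, 0.0)]
downsampled = voxel_grid_downsample(cloud, leaf_size=1.0)
print(downsampled)
```

A larger `leaf_size` gives fewer output points, which is the usual way to trade detail for speed before further processing.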

New tf frames

There are new tf frames that you can use, which simplifies interaction in rviz (for those not used to Z-forward). The new frames also bring the driver in conformance with REP 103.

These frames are: /openni_camera, /openni_rgb_frame, /openni_rgb_optical_frame (Z forward), /openni_depth_frame, and /openni_depth_optical_frame (Z forward). For more info, see Tully's ros-kinect post.
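The difference between the two conventions can be shown with a small sketch. REP 103 uses x forward, y left, z up for body frames, and z forward, x right, y down for optical frames. The functions below only re-label axes between those two conventions; they are hypothetical helpers, not part of the driver, and they ignore any translation offset between the actual tf frames.

```python
def optical_to_body(point):
    """Convert (x right, y down, z forward) to (x forward, y left, z up)."""
    x_opt, y_opt, z_opt = point
    return (z_opt, -x_opt, -y_opt)


def body_to_optical(point):
    """Convert (x forward, y left, z up) to (x right, y down, z forward)."""
    x_body, y_body, z_body = point
    return (-y_body, -z_body, x_body)


# A point 2 m in front of the camera, 0.5 m to the right, 0.25 m below the axis.
p_optical = (0.5, 0.25, 2.0)
p_body = optical_to_body(p_optical)
print(p_body)
```

In practice tf performs this conversion for you; the sketch only shows why a Z-forward cloud looks rotated when viewed in an X-forward frame.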

Roadmap

We're getting close to the point where we will be breaking the ni stack up into smaller pieces. This will keep the main driver lightweight, while still enabling libraries to be integrated on top. We will also be folding in more of PCL's capabilities soon.

This morning OpenNI was launched, complete with open source drivers for the PrimeSense sensor. We are happy to now announce that we have completed our first integration with OpenNI, which will enable users to get point cloud data from a PrimeSense device into ROS. We have also enabled support for the Kinect sensor with OpenNI.

This new code is available in the ni stack. We have low-level, preliminary integration of the NITE skeleton and hand-point gesture library. In the coming days we hope to have the data accessible in ROS as well.

For more information, please see ros.org/wiki/ni.

cross-posted from willowgarage.com

Nobody likes to wait, even for a robot. So when a personal robot searches for an object to deliver, it should do so in a timely manner. To accomplish this, Feng Wu from the University of Science and Technology of China spent his summer internship developing new techniques to help PR2 select which sensors to use in order to more quickly find objects in indoor environments.

Robots like PR2 have several sensors, but they can be generally categorized into two types: wide sensing and narrow sensing. Wide sensing covers larger areas and greater distances than narrow sensing, but the data may be less accurate. On the other hand, narrow sensing can use more power and take more time to collect and analyze. Feng worked on planning techniques to balance the tradeoffs between these two types of sensing actions, gathering more information while minimizing the cost.

The techniques involved the use of the Partially Observable Markov Decision Process. POMDP provides an ideal mathematical framework for modeling wide and narrow sensing. The sensing abilities and uncertainty of sensing data are modeled in an observation function. The cost of sensing actions can be defined in a reward function. The solution balances the costs of the sensing action and the rewards (i.e., the amount of information gathered).

For example, one part of the search tree might represent the following: "If I move to the middle of the room, look around and observe clutter to my left, then I will be very sure that there is a bottle to the left, moderately sure that there is nothing in front of me, and completely unsure about the rest of the room." When actually performing tasks in the world, the robot will receive observations from its sensors. These observations will be used to update its current beliefs. Then the robot can plan once again, using the updated beliefs. Planning in belief space allows making tradeoffs such as: "I'm currently uncertain about where things are, so it's worth taking some time to move to the center of the room to do a wide scan. Then I'll have enough information to choose a good location toward which to navigate."
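The belief update described above can be sketched with Bayes' rule. The example below is a minimal illustration, not code from the find_object stack: it assumes the object does not move between observations, so the new belief is the old belief multiplied by the observation likelihood and renormalized. The region names, the observation labels, and all probabilities are made up for the example.

```python
def update_belief(belief, observation, observation_model):
    """Bayes update b'(s) proportional to P(observation | s) * b(s), static state."""
    unnormalized = {
        state: observation_model[state][observation] * prob
        for state, prob in belief.items()
    }
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {state: value / total for state, value in unnormalized.items()}


# Hypothetical numbers: where is the bottle, and how likely is a wide scan
# to report "clutter on the left" for each true location?
belief = {"left": 1 / 3, "front": 1 / 3, "right": 1 / 3}
wide_scan_model = {
    "left": {"clutter_left": 0.8, "no_clutter": 0.2},
    "front": {"clutter_left": 0.1, "no_clutter": 0.9},
    "right": {"clutter_left": 0.1, "no_clutter": 0.9},
}

belief = update_belief(belief, "clutter_left", wide_scan_model)
print(belief)
```

A planner working in belief space would compare how much different sensing actions are expected to sharpen this distribution against what each action costs.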

For more information, you can read Feng's slides. You can also check out the find_object stack on ROS.org.

crossposted from willowgarage.com

Simple trial and error is one of the most common ways that we learn how to perform a task. While we can learn a great deal by watching and imitating others, it is often through our own repeated experimentation that we learn how -- or how not -- to perform a given task. Peter Pastor, a PhD student from the Computational Learning and Motor Control Lab at the University of Southern California (USC), has spent his last two internships here working on helping the PR2 to learn new tasks by imitating humans, and then improving that skill through trial and error.

Last summer, Peter's work focused on imitation learning. The PR2 robot learned to grasp, pour, and place beverage containers by watching a person perform the task a single time. While it could perform certain tasks well with this technique, many tasks require learning about information that cannot necessarily be seen. For example, when a person opens a door, the robot cannot tell from watching how hard to push or pull. This summer, Peter extended his work to use reinforcement learning algorithms that enable the PR2 to improve its skills over time.

Peter focused on teaching the PR2 two tasks with this technique: making a pool shot and flipping a box upright using chopsticks. With both tasks, the PR2 robot first learned the task via multiple demonstrations by a person. With the pool shot, the robot was able to learn a more accurate and powerful shot after 20 minutes of experimentation. With the chopstick task, the robot was able to improve its success from 3% to 86%. To illustrate the difficulty of the task, Peter conducted an informal user study in which 10 participants performed 20 attempts at flipping the box by guiding the robot's gripper. Their success rate was only 15%.

Peter used Dynamic Movement Primitives (DMPs) to compactly encode movement plans. The parameters of these DMPs can be learned efficiently from a single demonstration by guiding the robot's arms. These parameters then become the initialization of the reinforcement learning algorithm that updates the parameters until the system has minimized the task-specific cost function and satisfied the performance goals. This state-of-the-art reinforcement learning algorithm is called Policy Improvement using Path Integrals (PI^2). It can handle high dimensional reinforcement learning problems and can deal with almost arbitrary cost functions.
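The core of a PI^2-style update can be sketched in a few lines: perturb the current parameters with noise, evaluate the cost of each perturbed rollout, and move the parameters by a probability-weighted average of the noise, where low-cost rollouts receive exponentially larger weights. The sketch below is a heavily simplified, episodic version under stated assumptions. It omits the time-indexed cost-to-go and the DMP basis functions of the full algorithm, it is not the policy_learning stack's code, and the toy cost function, noise level, and constant `h` are hypothetical.

```python
import math
import random


def pi2_update(theta, cost_fn, rng, num_rollouts=20, noise_std=0.3, h=10.0):
    """One simplified PI^2-style update: probability-weighted averaging of noise."""
    noises = [[rng.gauss(0.0, noise_std) for _ in theta]
              for _ in range(num_rollouts)]
    costs = [cost_fn([t + e for t, e in zip(theta, eps)]) for eps in noises]
    lo, hi = min(costs), max(costs)
    spread = (hi - lo) or 1.0  # avoid division by zero if all costs are equal
    # Low-cost rollouts get exponentially larger weights.
    weights = [math.exp(-h * (c - lo) / spread) for c in costs]
    total = sum(weights)
    return [
        t + sum(w * eps[i] for w, eps in zip(weights, noises)) / total
        for i, t in enumerate(theta)
    ]


def toy_cost(params):
    """Hypothetical task cost: squared distance to a target parameter vector."""
    target = [1.0, -2.0]
    return sum((p - g) ** 2 for p, g in zip(params, target))


rng = random.Random(0)
theta = [0.0, 0.0]  # stands in for parameters initialized from a demonstration
initial_cost = toy_cost(theta)
for _ in range(50):
    theta = pi2_update(theta, toy_cost, rng)
final_cost = toy_cost(theta)
print(initial_cost, final_cost)
```

Because the update only needs cost values for sampled rollouts and no gradient of the cost, it fits the description above of handling almost arbitrary cost functions.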

Programming robots by hand is challenging, even for experts. Peter's work aims to facilitate the encoding of new motor skills in a robot by guiding the robot's arms, enabling it to improve its skill over time through trial and error. For more information about Peter's research with DMPs, see "Learning and Generalization of Motor Skills by Learning from Demonstration" from ICRA 2009. The work that Peter did this summer has been submitted to ICRA 2011 and is currently under review. Check out his presentation slides below (download PDF). The open source code has been written in collaboration with Mrinal Kalakrishnan and is available in the ROS policy_learning stack.

crossposted from willowgarage.com

Helen Oleynikova, a student at Olin College of Engineering, spent her summer internship at Willow Garage working on improving visual SLAM libraries and integrating them with ROS. Visual SLAM is a useful building block in robotics with several applications, such as localizing a robot and creating 3D reconstructions of an environment.

Visual SLAM uses camera images to map out the position of a robot in a new environment. It works by tracking image features between camera frames, and determining the robot's pose and the position of those features in the world based on their relative movement. This tracking provides an additional odometry source, which is useful for determining the location of a robot. As camera sensors are generally cheaper than laser sensors, this can be a useful alternative or complement to laser-based localization methods.

In addition to improving the VSLAM libraries, Helen put together documentation and tutorials to help you integrate Visual SLAM with your robot. VSLAM can be used with ROS C Turtle, though it requires extra modifications to install as it requires libraries that are being developed for ROS Diamondback.

To find out more, check out the vslam stack on ROS.org. For detailed technical information, you can also check out Helen's presentation slides below.

One of the new features in ROS C Turtle was a critical component of our recent "hackathons." When fetching a drink out of a refrigerator, for example, a robot has to perform numerous tasks such as grasping a handle, opening a door, and scanning for drinks. These tasks have to be carefully orchestrated to deal with unexpected conditions and errors. We've previously used complex task-planning systems to orchestrate these actions, but our developers and researchers needed something more rapid for prototyping robot behaviors.

One of our interns came up with an answer. SMACH ("State MACHine", pronounced "smash") is a task-specification and coordination architecture that was developed by Jonathan Bohren as part of his second internship here at Willow Garage. Jonathan came to us from the GRASP Lab at University of Pennsylvania and is now headed off to the Laboratory for Computational Sensing and Robotics (LCSR) at Johns Hopkins. During his extended stay here, SMACH was used in a variety of PR2 projects.

SMACH was first used in the rewrite of our plugging and doors code, then further refined during our billiards, cart-pushing, and drink-fetching hackathons. In all of these projects, the ability to code these behaviors quickly was critical, as was the ability to create more robust behaviors for dealing with failure.

SMACH is a ROS-independent Python library, so it can be used with and without ROS infrastructure. It comes with important developer tools like a visualizer for the current SMACH plan and introspection tools to monitor the internal state and data flow. There are already many SMACH tutorials that can be found on the ROS wiki, and we hope to see SMACH used to produce many more cool robotics apps!
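The underlying pattern, states that return outcome labels and a table that maps each outcome to the next state, can be sketched in plain Python. The code below deliberately does not import or reproduce the SMACH API; the class names, state names, and the retry behaviour are hypothetical and only illustrate how failure handling becomes an explicit transition.

```python
class State:
    """A state does some work and returns an outcome label."""

    def __init__(self, name, action):
        self.name = name
        self.action = action  # callable taking shared data, returning an outcome

    def execute(self, data):
        return self.action(data)


class StateMachine:
    """Runs states in sequence, following outcome -> next-state transitions."""

    def __init__(self, final_outcomes):
        self.final_outcomes = set(final_outcomes)
        self.states = {}
        self.transitions = {}
        self.initial = None

    def add(self, state, transitions):
        if self.initial is None:
            self.initial = state.name  # first state added is the start state
        self.states[state.name] = state
        self.transitions[state.name] = transitions

    def execute(self, data):
        current = self.initial
        while current not in self.final_outcomes:
            outcome = self.states[current].execute(data)
            data["trace"].append((current, outcome))
            current = self.transitions[current][outcome]
        return current


def grasp_handle(data):
    # Hypothetical behaviour: fail the first attempt, succeed on the retry.
    data["attempts"] += 1
    return "succeeded" if data["attempts"] >= 2 else "failed"


def open_door(data):
    return "succeeded"


sm = StateMachine(final_outcomes=["done", "aborted"])
sm.add(State("GRASP_HANDLE", grasp_handle),
       {"succeeded": "OPEN_DOOR", "failed": "GRASP_HANDLE"})
sm.add(State("OPEN_DOOR", open_door),
       {"succeeded": "done"})

data = {"attempts": 0, "trace": []}
result = sm.execute(data)
print(result, data["trace"])
```

Recording the trace of visited states is a small stand-in for the kind of introspection that makes such executives easier to debug.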

cross posted from willowgarage.com

Our Gazebo engineers have been hard at work bringing improvements to the Gazebo user interface and the simulation quality of the PR2 robot. These improvements will be available with the forthcoming release of ROS C-Turtle. Included will be the option to create simple shapes (boxes, spheres, and cylinders) and light sources (point, spot, and directional) within the GUI, while a simulation is running. This capability lets a developer dynamically change and even create a simulation environment on the fly. These modifications can be saved to file, and reloaded as needed.

You will be able to use the mouse to select and manipulate every object in the simulation. Once an object is selected, three rings and six boxes appear around the object. The rings allow you to rotate the object in all three axes, and the boxes provide a mechanism for translation. This manipulation interface provides a convenient and intuitive tool with which to modify a simulation. It's also possible to pause and modify the world by pressing the space bar, or simply selecting the pause button.

In addition to these GUI improvements, more ROS service and topic interfaces will be added in the new Gazebo release. For details on the proposed Gazebo ROS API, please check out this tutorial.

The upcoming version of Gazebo will also include improvements to the model of the PR2 robot. With the help of some graphic artists, we've added detailed meshes and textures to the PR2 model. These new meshes not only improve the appearance of the PR2 in simulation, but also improve the way sensors such as laser range finders interact with the robot. These new details, along with GPU shaders, create a realistic simulation of the PR2.

Gazebo's enhanced representations of the real world demonstrate the power of developing and debugging algorithms using Gazebo. Check out the videos below to see the new features in action!

We just released robot_model 1.1.0. This is an unstable development branch to prepare new features for the next ROS distribution. The most exciting new feature is the URDF to COLLADA conversion tool. For more details, check out the stack documentation and change list.

I Heart Robotics has released a rovio stack for ROS, which contains a controller, a joystick teleop node, and associated launch files for the WowWee Rovio. There are also instructions and configuration for using the probe package from brown-ros-pkg to connect to Rovio's camera.

You can download the rovio stack from iheart-ros-pkg:

http://github.com/IHeartRobotics/iheart-ros-pkg

As the announcement notes, this is still a work in progress, but this release should help other Rovio hackers participate in adding new capabilities.

From Armin Hornung at University of Freiburg:

Version 0.11 of Freiburg's "nao" stack has just been released, providing a few minor fixes for v0.1. Files are available packaged at:

http://code.google.com/p/

or via source checkout from:

https://alufr-ros-pkg.

All code is fully compatible with ROS 1.0. On the robot side, this will probably be the last release compatible with the NaoQI API 1.3.17, as the new version 1.6.0 became available recently.

Additionally, extended documentation for all nodes in the stack is now available at http://www.ros.org/wiki/nao/.

Best regards,

Armin

One of the new libraries we're working on for ROS is a web infrastructure that allows you to control the robot and various applications via a normal web browser. The web browser is a powerful interface for robotics because it is ubiquitous, especially with the availability of fully-featured web browsers on smart phones.

The web_interface stack for ROS allows you to connect to a Web-enabled ROS robot, see through its cameras, and launch applications. Beneath the hood is a Javascript library that is capable of sending and receiving ROS messages, as well as calling ROS services.

With just a couple of clicks from any web browser, you can start and calibrate a robot. We've written applications for basic capabilities like joystick control and tucking the arms of the PR2 robot. We've also written more advanced applications that let you select the locations of outlets on a map for the PR2 to plug into. We hope to see many more applications available through this interface so that users can control their robot easily with any web-connected device.

We're also developing 3D visualization capabilities based on the O3D extension that is available with upcoming versions of Firefox and Chrome. This 3D visualization environment is already being tested as a user interface for grasping objects that the robot sees.

All of these capabilities are still under active development and not recommended for use yet, but we hope that they will become useful platform capabilities in future releases.

Over the past month we've been working hard on PR2's capability to autonomously recharge itself. We developed a new vision-based approach for PR2 to locate a standard outlet and plug itself in, and integrated this capability into a web application you can run from your browser. We've developed this code to be compatible with ROS Box Turtle.

The following stacks have been released for Box Turtle:

- executive_python 0.1.0

- pr2_apps 0.2.1

- pr2_plugs 0.2.1

- pr2_common_actions 0.1.3

- pr2_robot 0.3.7

- pr2_doors 0.2.6

- pr2_navigation 0.1.1

- pr2_navigation_apps 0.1.1

- web_interface 0.3.15

Change lists

Bosch's Research and Technology Center (RTC) has a Segway-RMP based robot that they have been using with ROS for the past year to do exploration, 3D mapping, and telepresence research. They recently released version 0.1 of their exploration stack in the bosch-ros-pkg repository, which integrates with the ROS navigation stack to provide 2D-exploration capabilities. You can use the bosch_demos stack to try this capability in simulation.

- 1 Mac Mini

- 2 SICK scanners

- 1 Nikon D90

- 1 SCHUNK/Amtec Powercube pan-tilt unit

- 1 touch screen monitor

- 1 Logitech webcam

- 1 Bosch gyro

- 1 Bosch 3-axis accelerometer

Like most research robots, it's frequently reconfigured: they added an additional Mac mini, Flea camera, and Videre stereo camera for some recent work with visual localization.

Bosch RTC has been releasing drivers and libraries in the bosch-ros-pkg repository. They will be presenting their approach for mapping and texture reconstruction at ICRA 2010 and hope to release the code for that as well. This approach constructs a 3D environment using the laser data, fits a surface to the resulting model, and then maps camera data onto the surfaces.

Researchers at Bosch RTC were early contributors to ROS, which is remarkable as bosch-ros-pkg is the first time Bosch has ever contributed to an open source project. They have also been involved with the ros-pkg repository to improve the SLAM capabilities that are included with ROS Box Turtle, and they have been providing improvements to a visual odometry library that is currently in the works.

Efforts are underway to develop a navigation stack for the arms analogous to the navigation stack for mobile bases. This includes a wide range of libraries that can be used for collision detection, trajectory filtering, motion planning for robot manipulators, and specific implementations of kinematics for the PR2 robot.

As part of this effort, Willow Garage is happy to announce the release of our first set of research stacks for arm motion planning. These stacks include a high-level arm_navigation stack, as well as general-purpose stacks called motion_planners, motion_planning_common, motion_planning_environment, motion_planning_visualization, kinematics, collision_environment, and trajectory_filters. There are also several PR2-specific stacks. All of these stacks can be installed on top of Box Turtle.

Significant contributions were made to this set of stacks by our collaborators and interns over the past two years:

- Ioan Şucan (from Lydia Kavraki's lab at Rice) developed the initial version of this framework while an intern at Willow Garage over the summers of 2008 and 2009 and has continued to contribute significantly since. His contributions include the OMPL planning library, which contains a variety of probabilistic planners, including ones developed by Lydia Kavraki's lab over the years.

- Maxim Likhachev's group at Penn (including Ben Cohen, who was a summer intern at Willow Garage in 2009) contributed the SBPL planning library that incorporates the latest techniques in search based motion planning.

- Mrinal Kalakrishnan from USC developed the CHOMP motion planning library while he was an intern at Willow Garage in 2009. This library is based on the work of Nathan Ratliff, Matthew Zucker, J. Andrew Bagnell and Siddhartha Srinivasa.

Additional contributions came from Radu Rusu, Matei Ciocarlie and Kaijen Hsiao (from Willow Garage) and Rosen Diankov (from CMU).

These stacks are currently classified as research stacks, which means that they have unstable APIs and are expected to change. We expect the core libraries to reach maturity fairly quickly and be released as stable software stacks, while other stacks will continue to incorporate the latest in motion planning research from the world-wide robotics community. We encourage the community to try them out, provide feedback, and contribute. A good starting point is the arm_navigation wiki page. There is also a growing list of tutorials.

Here are some blog posts of demos that show these stacks in use:

- JSK demo (pr2_kinematics)

- Robot replugged (pr2_kinematics)

- Hierarchical planning (OMPL, move_arm)

- Towers of Hanoi (move_arm)

- Detecting tabletop objects (move_arm)

You can also watch the videos below that feature the work of Ben Cohen, Mrinal Kalakrishnan, and Ioan Şucan.

The individual stack and package wiki pages have descriptions of the current status. We have undergone a ROS API review for most packages, but the C++ APIs have not yet been reviewed. We encourage you to use the ROS API -- we will make our best effort to keep this API stable. The C++ APIs are being actively worked on (see the Roadmap on each Wiki page for more details) and we expect to be able to stabilize a few of them in the next release cycle.

Please feel free to point out bugs, make feature requests, and tell us how we can do better. We particularly encourage developers of motion planners to look at integrating their motion planners into this effort. We have made an attempt to modularize the architecture of this system so that components developed by the community can be easily plugged in. We also encourage researchers who may use these stacks on other robots to get back to us with feedback about their experience.

Best Regards,

Your friendly neighborhood arm navigation development team

Sachin Chitta, Gil Jones (Willow Garage)

Ioan Sucan (Rice University)

Ben Cohen (University of Pennsylvania)

Mrinal Kalakrishnan (USC)

Videos

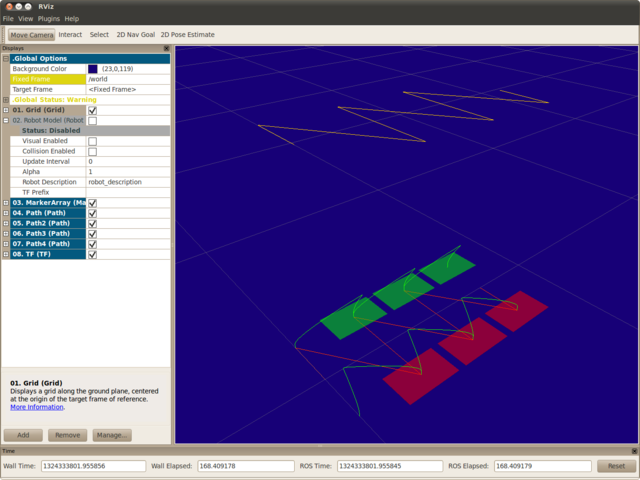

In recent posts, we've showcased the rviz 3-D visualizer and navigation stack, two of the many useful libraries and tools included in the ROS Box Turtle distribution. Now, we'd like to highlight what we're developing for future distribution releases.

The first is the PR2 calibration stack. The PR2 has two stereo cameras, two forearm cameras, one high-resolution camera, and both a tilting and a base laser rangefinder. That's a lot of sensor data to combine with the movement of the robot.

The PR2 calibration stack recently made our lives simpler when updating our plugging-in code. Eight months ago, without accurate calibration between the PR2 kinematics and sensors, the original plugging-in code applied a brute force spiralling approach to determining an outlet's position. Our new calibration capabilities give the PR2 the new-found ability to plug into an outlet in one go.
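To give a feel for what a brute-force spiralling search involves, here is a minimal sketch. It is not the original plugging-in code: the function names, the step size, and the `try_pose` callback are hypothetical, and the search is reduced to a 2D plane for illustration.

```python
import math


def spiral_offsets(step, turns, points_per_turn=8):
    """Yield (dx, dy) offsets along an outward Archimedean spiral.

    The radius grows by `step` for every full turn, starting at the center.
    """
    total = turns * points_per_turn
    for i in range(total + 1):
        angle = 2.0 * math.pi * i / points_per_turn
        radius = step * i / points_per_turn
        yield (radius * math.cos(angle), radius * math.sin(angle))


def spiral_search(start, try_pose, step=0.005, turns=5):
    """Probe positions around `start` until `try_pose` reports success.

    `try_pose` is a caller-supplied function taking an (x, y) candidate
    and returning True on success. Returns the successful candidate,
    or None if the whole spiral was exhausted.
    """
    x0, y0 = start
    for dx, dy in spiral_offsets(step, turns):
        candidate = (x0 + dx, y0 + dy)
        if try_pose(candidate):
            return candidate
    return None
```

Every probe costs a physical attempt, so the number of tries grows with the uncertainty in the initial estimate. With accurate calibration the first candidate is close enough to succeed.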

The video above shows how we're making calibration a simpler, more automated process for researchers. The PR2 robot can calibrate many of its sensors automatically by moving a small checkerboard through various positions in front of its sensors. You can start the process before lunch, and by the time you get back, there's a nicely calibrated robot ready to go. We're also working on tools to help researchers understand how well each individual sensor is calibrated.

The PR2 calibration stack is still under active development, but can be used by PR2 robot users with Box Turtle. In the future, we hope this stack will become a mature ROS library capable of supporting a wide variety of hardware platforms.

Now that the ROS Box Turtle release is out, we'd like to highlight some of its core capabilities, and share some of the features that are in the works for the next release.

First up is the ROS Navigation stack, perhaps the most broadly used ROS library. The Navigation stack is in use throughout the ROS community, running on robots both big and small. Many institutions, including Stanford University, Bosch, Georgia Tech, and the University of Tokyo, have configured this library for their own robots.

The ROS Navigation stack is robust, having completed a marathon -- 26.2 miles -- over several days in an indoor office environment. Whether the robot is dodging scooters or driving around blind corners, the Navigation stack provides robots with the capabilities needed to function in cluttered, real-world environments.

You can watch the video above for more details about what the ROS Navigation stack has to offer, and you can read documentation and download the source code at: ros.org/wiki/navigation.

We are excited to announce that our first ROS Distribution, "Box Turtle", is ready for you to download. We hope that regular distribution releases will increase the stability and adoption of ROS.

A ROS Distribution is just like a Linux distribution: it provides a broad set of libraries that are tested and released together. Box Turtle contains the many 1.0 libraries that were recently released. The 1.0 libraries in Box Turtle have stable APIs and will only be updated to provide bug fixes. We will also make every effort to maintain backwards-compatibility when we do our next release, "C Turtle".

There are many benefits of a distribution. Whether you're a developer trying to choose a consistent set of ROS libraries to develop against, an instructor needing a stable platform for course materials, or an administrator creating a shared install, distributions make installing and releasing ROS software more straightforward. The Box Turtle release will allow you to easily get bug fixes without worrying about new features breaking backwards compatibility.

With this Box Turtle release, installing ROS software is easier than ever before with Ubuntu Debian packages. You can now "apt-get install" ROS and its many libraries. We've been using this capability at Willow Garage to install ROS on all of our robots. Our plugs team was able to write their revamped code on top of the Box Turtle libraries, which saved them time and provided greater stability.

Box Turtle includes two variants: "base" and "pr2". The base variant contains our core capabilities and tools, like navigation and visualization. The pr2 variant comes with the libraries we use on our shared installs for the PR2, as well as the libraries necessary for running the PR2 in simulation. Robots vary much more than personal computers, and we expect that future releases will include variants to cover other robot hardware platforms.

We have new installation instructions for ROS Box Turtle. Please try them out and let us know what you think!

ROS 1.0 has been released!

We also released pr2_simulator 1.0 today, which completes our 1.0 release cycle.

To put this all in context, getting to 1.0 meant completing:

- 203 ROS software tutorials

- 29 ROS Stacks at 1.0 status, which contain a total of 186 ROS Packages

- 21 Use Cases, requiring well over a hundred user studies

We also launched the ROS.org community site, including revamped wiki, blog, and faster SVN servers.

Development on ROS began a little over two years ago. There have been many changes since those early days, and what started as a little project with a couple of developers has blossomed into a vibrant community supporting a wide variety of robots, big and small, academia and industry.

We hope that this 1.0 release will help the community even more and that you all will continue to participate and contribute.

While ROS 1.0 is an important step, we're even more excited by the many other libraries that have reached 1.0, including:

- Core libraries: common 1.0, common_msgs 1.0, diagnostics 1.0, geometry 1.0, robot_model 1.0

- Visualization tools: visualization 1.0, visualization_common 1.0

- Drivers: driver_common 1.0, imu_drivers 1.0, joystick_drivers 1.0, laser_drivers 1.0, sound_drivers 1.0

- Navigation: navigation 1.0, slam_gmapping 1.0

- Simulation: physics_ode 1.0, simulator_stage 1.0, simulator_gazebo 1.0, pr2_simulator 1.0

- Perception: image_common 1.0, image_pipeline 1.0, laser_pipeline 1.0, vision_opencv 1.0

- PR2: pr2_common 1.0, pr2_controllers 1.0, pr2_gui 1.0, pr2_mechanism 1.0, pr2_power_drivers 1.0, pr2_ethercat_drivers 1.0

We look forward to your feedback and improvements on these building blocks.

-- your friendly neighborhood ROS 1.0 team

Changes

The focus of changes for this release was on bug fixes, cleanup, and resolving any remaining API issues.

One deprecated API was removed in this final release, so code that has not yet been updated will require migration:

- roscpp: Removed deprecated 3-argument versions of param::get() and NodeHandle::getParam()

rospy now also warns on illegal names. We have been introducing more name strictness in ROS. ROS auto-generates source code of messages and services. The name strictness ensures that source code can be validly generated for these types. There are also potential features in our roadmap that will require name strictness as well.
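As a rough illustration of what such a name check looks like, here is a minimal sketch. It is not rospy's own code: the function names are hypothetical, and the rule is assumed from the ROS wiki's description of graph resource names (the first character is a letter, `~`, or `/`, and the remaining characters are letters, digits, `_`, or `/`).

```python
import re
import warnings

# Assumed rule, taken from the ROS wiki's description of graph resource names.
_LEGAL_NAME = re.compile(r"[A-Za-z~/][A-Za-z0-9_/]*")


def is_legal_name(name):
    """Return True if the whole string matches the assumed naming rule."""
    return _LEGAL_NAME.fullmatch(name) is not None


def warn_if_illegal(name):
    """Emit a warning rather than raising, so existing code keeps running."""
    if not is_legal_name(name):
        warnings.warn("illegal name: %r" % name)
        return False
    return True
```

Under this rule, `/robot/base_scan` and `~private_param` pass, while `my-topic` and `1st_topic` trigger a warning. Restricting names to this character set is what allows them to be turned into valid identifiers in generated source code.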

For a complete list of changes in this release, please see the change list.

In the coming weeks, there will be a flurry of 1.0 stack releases. The first of these are common 1.0, common_msgs 1.0, physics_ode 1.0, sound_drivers 1.0 and visualization_common 1.0.

We are making these releases in advance of our first ROS "Distribution" (Box Turtle), which will package our stable stacks together. Much like a Linux distribution (e.g. Ubuntu's Karmic Koala), the software stacks in the distribution will have a stable set of APIs for developers to build upon. We will release patch updates into the distribution, but otherwise keep these stacks stable. We've heard the needs from the community for a stable version of libraries to build upon, whether it be for research lab setups or classroom teaching, and we hope that these well-documented and well-tested distributions will fit that need. We will separately continue work on development of new features so that subsequent distributions will have exciting new capabilities to try out.

We're very excited to be nearing the end of our milestone 3 process. These 1.0 releases represent several months of coding, user testing, and documentation, so that the ROS community can use a broad set of stable robotics libraries to build upon. We appreciate the many contributions the community has made to these releases, from code, to bug reports, to participating in user tests. These releases also build upon many third-party robotics-related open source libraries.

For these releases, you will find links to "releases" and "change list", where you can find information about downloading these releases as well as information on what has changed:

Links to download/SVN tags

- http://www.ros.org/wiki/common/Releases

- http://www.ros.org/wiki/common_msgs/Releases

- http://www.ros.org/wiki/physics_ode/Releases

- http://www.ros.org/wiki/sound_drivers/Releases

- http://www.ros.org/wiki/visualization_common/Releases

Change Lists

- http://www.ros.org/wiki/common/ChangeList

- http://www.ros.org/wiki/common_msgs/ChangeList

- http://www.ros.org/wiki/physics_ode/ChangeList

- http://www.ros.org/wiki/sound_drivers/ChangeList

- http://www.ros.org/wiki/visualization_common/ChangeList

In most cases, the 1.0 releases will only contain minor updates from previous releases.

NOTE: not every stack in the final distribution will attain 1.0 status. We are reserving the 1.0 label for libraries that we believe to be the most mature and stable.

-- your friendly neighborhood ROS team

Find this blog and more at planet.ros.org.